Well, the short answer is .093 seconds. That’s about the length of audio a standard frequency analysis uses to break a sound down into its full set of component frequencies.

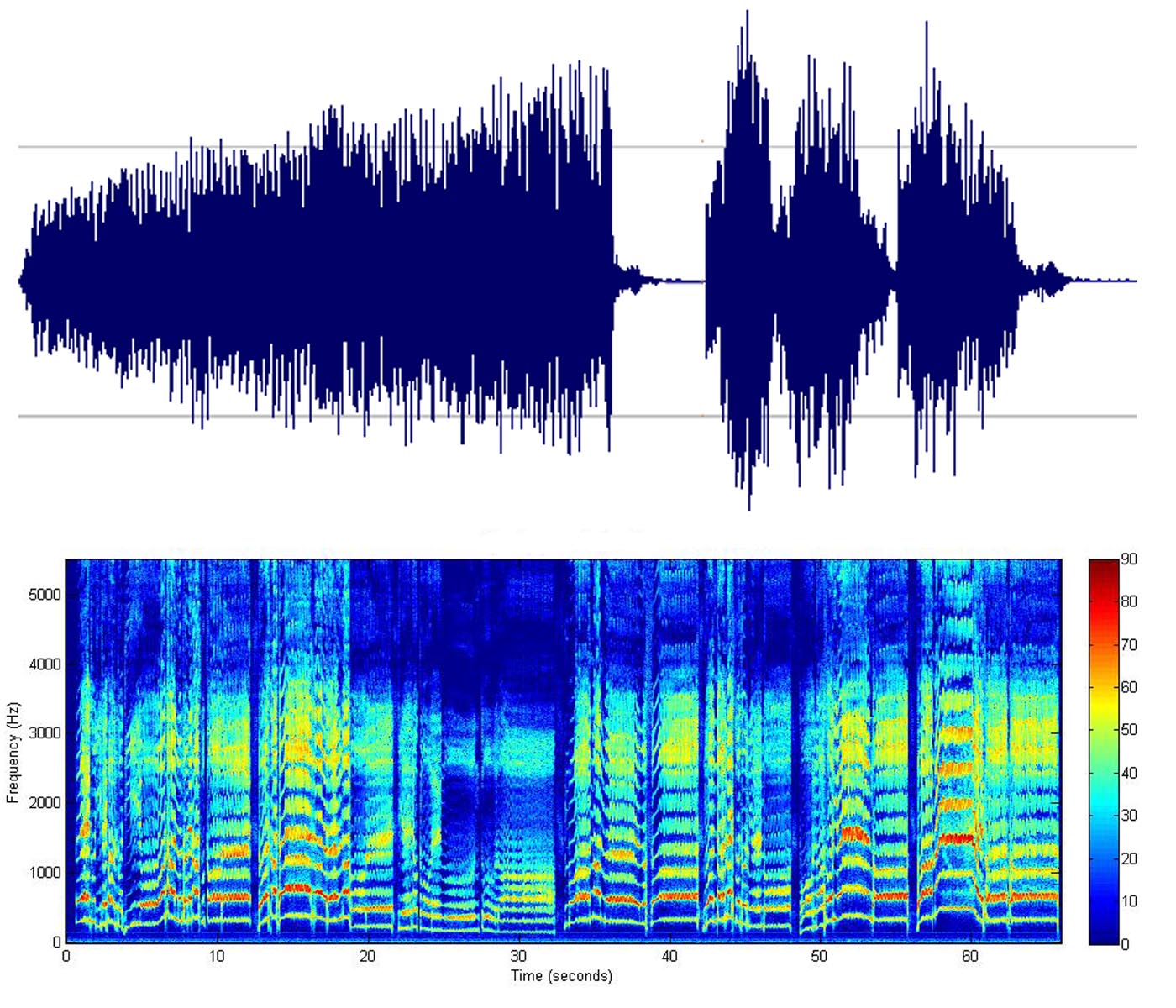

On an even smaller scale, computers typically store sound as 44,100 samples per second. These samples make up the waveform view of sound that most people are accustomed to seeing. However, each sample records only the wave’s amplitude at a single instant, which on its own paints a pale portrait of sound. Sound in the physical world is essentially an unfolding of waves over time. Therefore, when translating from physical to digital, frequency information over time is essential to give a meaningful atomic definition of any sound.

Armed with the fast Fourier transform, an efficient algorithm for computing a signal’s discrete Fourier transform, audio software typically takes the amplitude values from a mere .093 seconds of sound and draws a complete audio portrait. This portrait consists of the strength of each component frequency that makes up a complex sound.
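To make the numbers concrete, here is a minimal Python sketch. It assumes the .093-second figure corresponds to a 4,096-sample analysis window at 44,100 samples per second (4096 / 44100 ≈ 0.0929 s), a common FFT size; the article itself doesn’t name the window size. The `fft` function is a textbook recursive radix-2 Cooley-Tukey FFT written for illustration, not the routine any particular program uses, and the two-sine test tone is an invented signal tuned to land exactly on FFT bins.

```python
import cmath
import math

SAMPLE_RATE = 44100  # samples per second, the CD-audio standard
WINDOW_SIZE = 4096   # assumed analysis window in samples (a power of two)


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT. len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])  # transform of even-indexed samples
    odd = fft(x[1::2])   # transform of odd-indexed samples
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]  # twiddle factor
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def amplitude_spectrum(samples, rate=SAMPLE_RATE):
    """Return (frequency_hz, amplitude) for bins 1..N/2-1 of a real signal."""
    n = len(samples)
    spectrum = fft(samples)
    # Scale by 2/N so a sine of amplitude A shows up as about A in its bin.
    return [(k * rate / n, 2 * abs(spectrum[k]) / n) for k in range(1, n // 2)]


# Window length and frequency resolution for a 4096-sample window.
print(f"window: {WINDOW_SIZE / SAMPLE_RATE:.4f} s")        # window: 0.0929 s
print(f"bin spacing: {SAMPLE_RATE / WINDOW_SIZE:.2f} Hz")  # bin spacing: 10.77 Hz

# Test tone: two sines placed exactly on bins 40 and 100 to avoid leakage.
f1 = 40 * SAMPLE_RATE / WINDOW_SIZE   # about 430.66 Hz
f2 = 100 * SAMPLE_RATE / WINDOW_SIZE  # about 1076.66 Hz
signal = [
    1.0 * math.sin(2 * math.pi * f1 * t / SAMPLE_RATE)
    + 0.5 * math.sin(2 * math.pi * f2 * t / SAMPLE_RATE)
    for t in range(WINDOW_SIZE)
]
peaks = sorted(amplitude_spectrum(signal), key=lambda p: p[1], reverse=True)[:2]
for freq, amp in peaks:
    print(f"{freq:8.2f} Hz  amplitude {amp:.3f}")
```

Run as-is, it should report two peaks, near 430.66 Hz with amplitude about 1.0 and near 1076.66 Hz with amplitude about 0.5. The bin spacing also shows the trade-off behind the window length: a longer window resolves frequencies more finely but smears events across more time.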

Thus, the Fourier transform is the key tool for spectralists, a loosely related group of composers and scientists whose goal is to analyze and resynthesize sound using its most basic digital elements. Spectralists literally tear sound apart into its tiniest grains and develop diverse strategies to reassemble those microsounds into new sounds that barely resemble the original. Between the two poles of granular analysis and synthesis, musicians have only begun to chart a new world of expression.
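The "tear apart and reassemble" idea can be sketched in a few lines of Python. This is a generic time-domain granulation example, assuming Hann-windowed grains of 100 samples (about 2.3 ms at 44.1 kHz) that overlap by half; the grain size, the test tone and the function names (`make_grains`, `overlap_add`) are illustrative choices, not any spectralist’s actual patch.

```python
import math
import random


def hann(n):
    """Periodic Hann window; copies of it overlapped by half sum to exactly 1."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def make_grains(samples, grain_size):
    """Cut a signal into Hann-windowed grains that overlap by half a grain."""
    hop = grain_size // 2
    window = hann(grain_size)
    return [
        [s * w for s, w in zip(samples[start:start + grain_size], window)]
        for start in range(0, len(samples) - grain_size + 1, hop)
    ]


def overlap_add(grains, order):
    """Lay grains out at a half-grain hop in the given order and sum overlaps."""
    grain_size = len(grains[0])
    hop = grain_size // 2
    out = [0.0] * (hop * (len(order) - 1) + grain_size)
    for slot, index in enumerate(order):
        for i, value in enumerate(grains[index]):
            out[slot * hop + i] += value
    return out


# A one-second 440 Hz test tone at 44.1 kHz, cut into 100-sample grains.
rate = 44100
tone = [math.sin(2 * math.pi * 440 * t / rate) for t in range(rate)]
grains = make_grains(tone, 100)

# The original order rebuilds the tone (apart from the first and last half-grain).
rebuilt = overlap_add(grains, list(range(len(grains))))

# A shuffled order yields a new texture made of the same microsounds.
random.seed(1)
shuffled = overlap_add(grains, random.sample(range(len(grains)), len(grains)))
```

With the grains in their original order, the overlapping Hann windows sum to one and the tone comes back intact except at the very edges; any other ordering produces a new sound built from the same microsounds.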

Spectralism emerged in the late ’70s within the gravitational pull of France’s IRCAM, a research institute for music and acoustics founded by Pierre Boulez. Early spectralists expanded on the technical advances of musique concrète and brought that tradition into the digital realm. Today, spectral analysis underpins many everyday digital tools, from noise reduction to convolution reverb. Spectral disciples build, share and critique each other’s patches on labyrinthine message-board threads. Using the fast Fourier transform (FFT), contemporary spectralists like William Brent can analyze sounds in real time and program computers to generate intelligent musical responses to a live musician’s improvisations on the fly. Because the FFT is efficient enough to run in real time without overloading a computer’s processor, it has become the key tool in the field’s general shift toward live, improvised granular synthesis.

Although the precise definition of spectral music is contested, spectralists generally compose with close attention to sonic texture and use audio analysis as an instrument in itself. Their work is often definitional and dry in presentation, perhaps because of the heady mathematics behind spectral techniques and the genre’s genealogical ties to formal traditions like musique concrète, serialism and process composition. Still, spectralism is an immensely broad field with applications in every musical context.

One of the foremost hive-minds of spectralism today is the University of California’s San Diego campus. Its computer music department includes giants of the field like Tom Erbe, the developer of Soundhack, and Miller Puckette, who created the original Max (the ancestor of Max/MSP) and its open-source, pared-down cousin, Pure Data. The department is unusual in striking a middle ground between strictly scientific audio-math departments and open-ended composition programs.

Miller Puckette’s Pure Data (PD) is perhaps the most accessible route to basic spectral techniques, since it is free, open-source software with a library of Fourier-related objects (among an army of other objects) built directly into its language. Originally, PD was developed as a basic music programming environment that would “include also a facility for making computer music scores with user-specifiable graphical representations.” As a visual “dataflow” language, in which programs are built by wiring together on-screen objects, it is more intuitive to learn than many similarly capable text-based languages like SuperCollider or Python.

Although it is clunkier than Max/MSP, PD is an incredibly powerful composition environment with nearly limitless programming possibilities. Because PD is written in C and can be extended in C, anything it does not already do conveniently can be built in that language; this openness has led many amateurs to create free, downloadable “externals” that expand PD’s toolbox. While commercial software like Ableton Live may be slicker and more user-friendly, Pure Data patches inherently expose their inner workings and are available for tinkering to anyone who takes the time to understand them.

UCSD professor Tom Erbe’s Soundhack is one of today’s most powerful spectral effects processors. With the ease of working an effects pedal, Soundhack can “convolve” two songs together into a mutated hybrid. With its “spiral stretch” effect, Soundhack turns sound into malleable plastic. Soundhack is especially wonderful because, as powerful as its effects are, it uses techniques almost completely ignored by other developers and produces sounds unlike anything else. Erbe has an intimate knowledge of the subtle qualities of even vintage electronics, and uses elaborate weighting strategies to digitally approximate complex analog behavior. Among plug-in developers for any platform, and especially for Pure Data and Max/MSP, Tom Erbe is one of the most accomplished at bridging esoteric math and musical intuition.
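Convolution itself is easy to sketch. The Python example below relies on the convolution theorem: convolving two signals is equivalent to multiplying their spectra, so both inputs are zero-padded, transformed with an FFT, multiplied bin by bin and transformed back. It is an illustration under stated assumptions, not Soundhack’s code: the two short input signals are invented stand-ins for songs, and a practical convolver would typically process long files block by block (for example with overlap-add) rather than in one transform.

```python
import cmath
import math


def fft(x, invert=False):
    """Recursive radix-2 FFT (unnormalized); invert=True flips the twiddle sign."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    sign = 1 if invert else -1
    even, odd = fft(x[0::2], invert), fft(x[1::2], invert)
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * math.pi * k / n) * odd[k]
        out[k], out[k + n // 2] = even[k] + t, even[k] - t
    return out


def fft_convolve(a, b):
    """Convolve two real signals by multiplying their spectra."""
    size = len(a) + len(b) - 1
    n = 1
    while n < size:
        n *= 2  # zero-pad to a power of two so the circular result does not wrap
    fa = fft(list(a) + [0.0] * (n - len(a)))
    fb = fft(list(b) + [0.0] * (n - len(b)))
    product = [x * y for x, y in zip(fa, fb)]
    # Inverse FFT = opposite-sign FFT divided by n; keep the real part.
    return [v.real / n for v in fft(product, invert=True)[:size]]


def direct_convolve(a, b):
    """Reference O(len(a) * len(b)) convolution for checking the fast version."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out


# Two short invented "recordings": a decaying click train and a rising sweep.
a = [math.exp(-i / 40) * (1.0 if i % 25 == 0 else 0.0) for i in range(200)]
b = [math.sin(2 * math.pi * (0.01 + 0.0005 * i) * i) for i in range(150)]
hybrid = fft_convolve(a, b)
```

The direct version is included only to check the result; for long signals the FFT route is far cheaper, which is why FFT-based convolution is the usual way to implement this kind of effect.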

Although Soundhack is available in its entirety for Max/MSP, William Brent, a recent PhD graduate of UCSD’s computer music program, has been porting Soundhack’s full effects package, piece by piece, to PD externals. This tribute to Brent’s teacher is an important step in Erbe’s general process of prototyping plug-ins for Max/MSP and then releasing them as open source for Pure Data.

Beyond this porting project, William Brent’s work for Pure Data is quite extensive. Brent’s TimbreID is one of the finest examples of spectralism’s definitional powers put to musical use. With the Timbrespace patch included in TimbreID, PD spectrally analyzes any given sound file and plots each grain of sound according to user-specified criteria. The user can then play any point on the graph with the mouse cursor. Timbrespace is at once a rigorous audio classification device and an electronic instrument. The program is especially suited to percussive music: since each drum hit is a separate unit roughly the size of the analysis window, reordering and resynthesizing percussion sounds fluid and natural. In one of TimbreID’s most pristine exercises, William Brent has a cellist perform a wild cello solo. TimbreID can then play back that solo with all of its sounds placed in order of timbral similarity.
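Ordering sounds by timbral similarity depends on which features are measured. As a hedged sketch, the Python below ranks grains by a single feature, the spectral centroid (the magnitude-weighted average frequency, a rough measure of brightness). TimbreID offers a range of features, and this example does not claim to reproduce how Brent’s cello demo chose its order; the function names, the 8,000 Hz sample rate and the three test grains are hypothetical.

```python
import cmath
import math


def dft_magnitudes(frame):
    """Magnitudes of bins 0..N/2 via a direct DFT (fine for short grains)."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def spectral_centroid(frame, rate):
    """Magnitude-weighted mean frequency in Hz: a rough 'brightness' measure."""
    mags = dft_magnitudes(frame)
    n = len(frame)
    total = sum(mags)
    if total == 0:
        return 0.0  # a silent grain has no meaningful centroid
    return sum(k * rate / n * m for k, m in enumerate(mags)) / total


def order_by_brightness(grains, rate):
    """Return grain indices sorted from darkest to brightest."""
    return sorted(range(len(grains)), key=lambda i: spectral_centroid(grains[i], rate))


# Three hypothetical 64-sample grains: pure tones on bins 8, 2 and 20.
RATE = 8000  # bin spacing is RATE / 64 = 125 Hz
grains = [[math.sin(2 * math.pi * k * t / 64) for t in range(64)] for k in (8, 2, 20)]
order = order_by_brightness(grains, RATE)  # darkest (bin 2) first
```

Real grains would usually be windowed (for example with a Hann window) before analysis, and a fuller classifier would compare several features at once rather than one.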

While William Brent’s work has largely served to refine and unify various audio analysis techniques, one new PhD candidate at UCSD is trying to overturn some of the field’s norms. Joe Mariglio has set his sights on one of the most prominent tools of spectralism: the fast Fourier transform. Because an FFT describes a sound as a sum of sine waves, assigning an amplitude (and phase) to each component frequency, it can only ever rebuild sound out of sinusoids. In the physical world, sound waves are infinitely more nuanced. “I value rough, grainy, transient rich textures. I think sinusoids are fairly overused to produce textures in computer music, and partially due to the work of composers like Curtis Roads, and mathematicians like Stephane Mallat, I've found several alternatives that seem to work better for me. While Fourier theory provides us with a way of constructing sound out of sine waves, wavelet theory provides us with a way of constructing sound out of anything. To me, this means I can have an infinite palette of microsounds to paint with.” One of the finer examples of Mariglio’s delicate timbral touch is a piece made by placing a piezo microphone on Brooklyn’s M train risers and feeding the train sounds through a granular synthesis process.
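Wavelets are easiest to see with the simplest one, the Haar wavelet, whose building blocks are square-edged pulses rather than sinusoids. The Python sketch below is a textbook multi-level Haar transform (the cascade of averaging and differencing steps associated with Mallat’s multiresolution analysis), not Mariglio’s real-time C library; the 64-sample click is an invented test signal chosen to show how compactly wavelets capture a transient.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_step(x):
    """One level of the orthonormal Haar transform: (approximation, detail)."""
    approx = [(x[i] + x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    return approx, detail


def haar_inverse_step(approx, detail):
    """Undo haar_step, interleaving the rebuilt even and odd samples."""
    out = []
    for a, d in zip(approx, detail):
        out += [(a + d) / SQRT2, (a - d) / SQRT2]
    return out


def haar_dwt(x, levels):
    """Multi-level Haar DWT; len(x) must be divisible by 2**levels."""
    details = []
    approx = list(x)
    for _ in range(levels):
        approx, d = haar_step(approx)
        details.append(d)
    return approx, details  # details[0] holds the finest time scale


def haar_idwt(approx, details):
    """Rebuild the signal from the coarsest scale to the finest."""
    for d in reversed(details):
        approx = haar_inverse_step(approx, d)
    return approx


# A single click in 64 samples of silence.
click = [0.0] * 64
click[37] = 1.0
approx, details = haar_dwt(click, levels=6)
coeffs = approx + [c for d in details for c in d]
nonzero = sum(1 for c in coeffs if abs(c) > 1e-12)
rebuilt = haar_idwt(approx, details)
```

The click is described by just 7 of its 64 coefficients, and the inverse transform rebuilds it exactly. A DFT of the same click, by contrast, has equal magnitude in every bin, because a single impulse contains all frequencies at once.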

To reduce the field’s near-universal reliance on the FFT, Mariglio hopes to make the wavelet transform more accessible. Real-time wavelet transforms are available in Matlab, a program aimed at engineers and physicists. One of the major tasks in making the wavelet transform viable as a spectral technique is making it known and readily accessible to creative people. Mariglio is currently building a C library that provides real-time wavelet synthesis, and he eventually plans to bring these functions to Pure Data. Popularizing wavelets as a means of granular synthesis, step by step, is one of the main goals of Mariglio’s PhD work. His rejection of one of spectralism’s fundamental tools also shows how open the field’s future remains. “As far as tools that are currently widely available for fast feature detection, the FFT is the state of the art. It irritates me that this is true, and I know I'm not the only one. I predict that we'll see some new research coming out that implements wavelets and other so-called sparse representations in real-time, with creative people as their target audience.”

Nat Roe is a DJ with WFMU and the editor of WFMU's blog. He has contributed in the past to The Wire, Signal To Noise and the Free Music Archive. Nat also cooperatively manages Silent Barn, a DIY venue in Ridgewood, Queens.