This essay accompanies the presentation of I/O/D's The Web Stalker as a part of the online exhibition Net Art Anthology.

The storage capacity of a floppy disk weighed in at a massive 1.4 megabytes in the 1990s. Can you imagine what to do with that much power? In 1994, trying to answer that question, Simon Pope, Colin Green, and I started to create an "interactive multimedia" publication that would fit onto a high-density floppy. We called ourselves, and our publication, I/O/D, which stood for a few things that we would make up on the fly without being fixed to any of them. We gave copies away for free, by post and at events (smaller ones at places such as 121 Bookshop in Brixton, or at events such as “Terminal Futures” curated by Lisa Haskel at the ICA or “Expo Destructo,” in the center of town) and it found its way onto bulletin board systems and Usenet, where it had to be divided up into 32 KB chunks. We spent a lot of time loading it into computers where it might be found by surprise: at exhibitions; internet cafes, which were rare; offices; anywhere we chanced across. Each issue included images, texts, sounds, and interactive works from a range of contributors. Our goal was to test the conditions of the emerging consensus around human-computer interaction, and what the structures of interaction meant. We felt users were being too readily appended to a set of conventions that were becoming fixed parts of culture without the fluidity necessary for something especially interesting to happen.

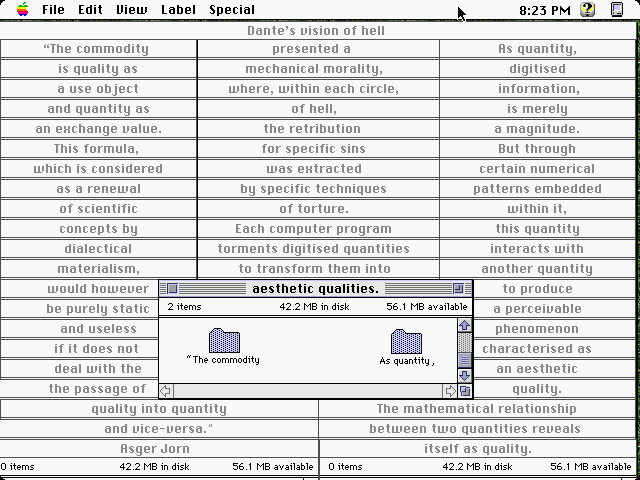

Screenshot of navigable Finder composition by the London Psychogeographical Association from I/O/D 3, running in Mac OS 7.5.

The project moved quite rapidly in the direction of becoming software. By the third issue of I/O/D we had introduced a supplement to the "Finder" element of the Macintosh operating system that would dredge random samples from any text files on the machine and put them in the speech bubbles of moveable figures drawn by comic artist Paquito Bolino. Partially by accident, it turned a story by the writer Ronald Sukenick into something like a virus that would rename a few files on any computer it was loaded onto with fragments from his text. We were also quite interested in the question of hype, of working with the opportunity of the unpredictable "new world of computing" to make work beyond people’s expectations. There was a sense that a change in media systems meant that they were as yet undetermined, and that aesthetically charged invention might be able to tip the movement of their development in some interesting directions.

In our fourth and final issue, we shifted focus from the desktop to the web, which was beginning to reach a broader public, though in London, as elsewhere, most internet access was still via dial-up modem. Artists were responding to its development, often rightly working with incoherence to test the too-ready assumptions that defined the internet as a medium of communication early on. However, we felt that there was still an implied acceptance of the aesthetic norms of the browser; for instance, that the browser was based primarily on the design for paper, emphasizing the single page as a coherent unit, rather than the connections amongst files.

The web was based on a structure of links. The patterns of connection of those links revealed the "native" power structure of the web. Today, people speak of certain websites operating as "gravity wells," where links to the outside are absent. Most such sites have links generated by scripts that refer to aggregates of content from databases, making them distinct from the hand-coded HTML documents of the 1990s, but the incipient tendency for large-scale sites to become hermetic and self-referential was already there as the flow of users and attention was beginning to become a valuable commodity in itself. We also saw that many sites were making users jump through hoops, such as pop-up ads or branded splash pages, before getting to any interesting material.

This tendency to silo the web page and contain the flow of users also affected the design of browsers. At that time, the battle among a small number of companies to determine the standards of the web and reap the rewards, imaginatively known as the “Browser Wars,” was in full swing. Fault lines appeared between websites that were optimized for the unique features of Netscape and those best viewed in Internet Explorer, with the companies developing each program always edging towards breaking the web by introducing novel features to undermine their competitor and get greater market share.

Our approach was to try to develop another order of interaction, one that was not content with what it might be presented with, that would try to look behind the assembling of smooth surfaces and into the plumbing. We were also interested in doing so by reconnecting to the imperatives of Constructivism, moving across art, design, and everyday life by making an object for direct use. The aim of the fourth issue of I/O/D, The Web Stalker, was to create a way of interfacing with the web that foregrounded some of the qualities of the network sublimated by other software. We wanted to develop an approach that would privilege fast access to information, and the ability to look ahead of the structures that were presented to users as well as to map the idiomatic structures of sites. We wanted to embed critical operations in software, forcing critical ideas to become productive rather than simply being aloof and knowing.

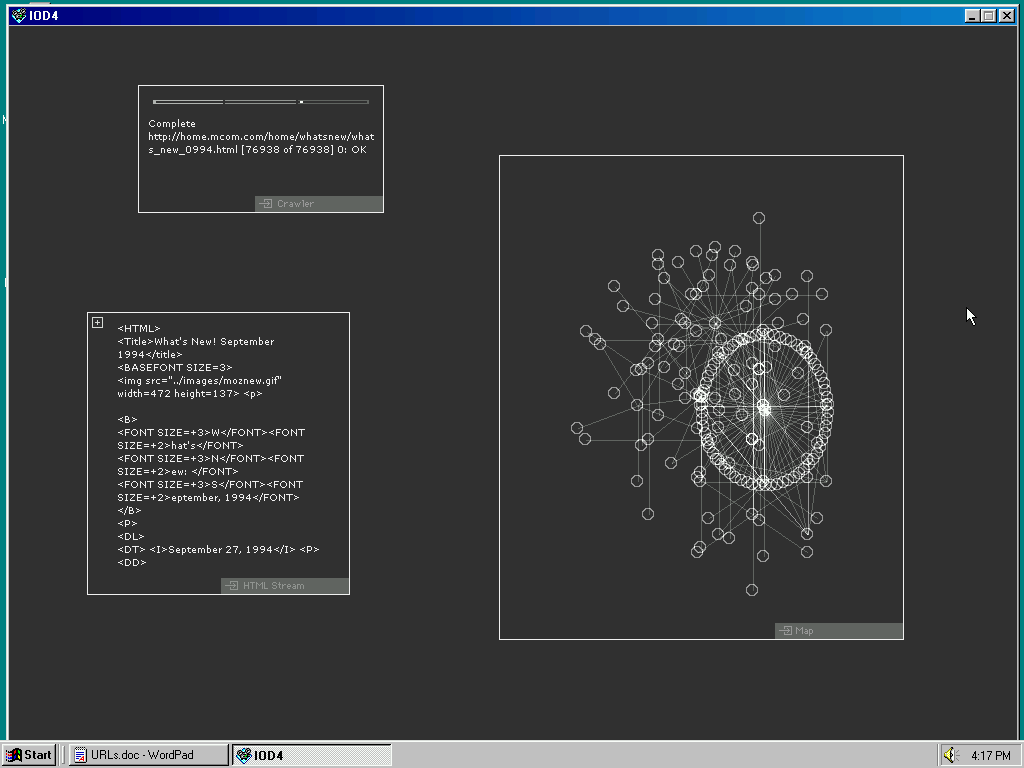

Screenshot of I/O/D 4: The Web Stalker

To these ends, I/O/D 4: The Web Stalker was a new kind of web browser that decomposed websites into separate sets of entities. The texts of the site were treated as the primary resource, but were stripped of most of their formatting. Links from one file to another were mapped in a network diagram, which allowed users to visualize their path through the clusters, skeins, and aporias of files. This Map was built dynamically as a Crawler function gradually moved through the network. We saw the logical structure of websites, established by the links in and between them, as another key resource, and we wanted the software to act in a modular manner, with users calling up functions, each with its own separate window, only when they needed them. The operations of the software were minimal and precise. At this point in time, images (introduced to the web via the Mosaic browser) were often seen as too heavy to load via dial-up, so we simply ignored them.
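
For readers who think more easily in code, the decomposition described above can be loosely sketched in a few lines of Python. This is not The Web Stalker's own code, and nothing here is taken from it: the `PageParser` class, the `crawl` function, and the tiny in-memory `SITE` are all hypothetical names invented for illustration, and a breadth-first walk is only one assumption about how a crawler might move through files. The sketch keeps the text, records the links as a map, and ignores images and formatting.

```python
from collections import deque
from html.parser import HTMLParser


class PageParser(HTMLParser):
    """Collect plain text and outgoing links; images and formatting are ignored."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.text_parts = []

    def handle_starttag(self, tag, attrs):
        # Only anchors matter here; <img>, <b>, <h1> and so on are skipped.
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_data(self, data):
        stripped = data.strip()
        if stripped:
            self.text_parts.append(stripped)


def crawl(pages, start):
    """Walk `pages` (a dict of file name -> HTML source) breadth-first from `start`.

    Returns (link_map, texts): the links found in each file, and its bare text.
    """
    link_map = {}
    texts = {}
    queue = deque([start])
    while queue:
        name = queue.popleft()
        if name in link_map:
            continue  # already visited
        if name not in pages:
            # A link that leads nowhere still appears in the map, as a dead end.
            link_map[name] = []
            texts[name] = ""
            continue
        parser = PageParser()
        parser.feed(pages[name])
        parser.close()
        link_map[name] = parser.links
        texts[name] = " ".join(parser.text_parts)
        queue.extend(parser.links)
    return link_map, texts


# A hypothetical three-file site, held in memory instead of fetched over a modem.
SITE = {
    "index.html": '<h1>Home</h1><img src="logo.gif">'
                  '<a href="a.html">A</a> <a href="b.html">B</a>',
    "a.html": '<p>Page <b>A</b></p><a href="index.html">back</a>',
    "b.html": '<p>Page B</p><a href="missing.html">gone</a>',
}

link_map, texts = crawl(SITE, "index.html")
for name, links in link_map.items():
    print(name, "->", links)
```

The resulting `link_map` is the raw material for a network diagram of the kind the Map window drew, while `texts` holds each file's words with the markup removed.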

The software was published online, where we gladly joined in with the Backspace server and space run by James Stevens. This was the site of numerous great projects and events and a meeting point for artists, hackers, "slacktivists," designers, and others. Our wager was that it was possible to reroute the excesses of hope and hype in those days away from the emerging and regressive norms they were being channelled through, if only people were able to encounter something more exciting. To encourage this, we also published and distributed thousands of stickers and hundreds of posters that found their way onto lampposts and (then amazingly novel) laptops. The software was made available on CD-ROMs of cheesy shareware on the covers of computer magazines, alongside distribution via art and design exhibitions and publications. The project speculated on, and aimed to provide the grounds for, the possible reinvention of a media system at the moment of its massification. It needed to be spread as widely as possible, and we were thrilled that people started taking it up in their own ways.

The analysis of websites via their links proved to be a powerful approach, one that was developed in parallel by a pair of students at Stanford University who have subsequently played quite a role in corralling and dominating the regimes of connection through Google. The Web Stalker in turn contributed to the development of the understanding of software as an explicitly cultural and social force, and it is in its capacity to elicit thought and further invention that it provides a possibly interesting specimen.