Printed scores were once necessary for music listening. Until the 20th century, every musician playing a symphony needed notated sheet music at hand for every performance. Today, the bulk of music listening happens through recordings. Musicians need to play a song correctly only once for anybody to hear it anytime, anywhere.

But with the streamlined dissemination of digital music on the Internet, today’s listeners need guidelines for how to consume music just as badly as musicians once needed scores to produce new music. There is simply too much recorded music for any one person to keep track of. Accordingly, “music discovery services”, which guide listeners through huge libraries of music, are beginning to emerge as a genuine growth industry.

Pandora, a leading music discovery service, famously began its Music Genome Project about a decade ago. The project is a music classification method that numerically rates songs according to a long list of criteria and sorts songs by these “genetic” similarities. Pandora’s website generates playlist suggestions based on a minimal amount of input from listeners. Ideally, Pandora can automatically create personally tailored playlists that a listener didn’t have the knowledge or time to create.
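To make the idea of sorting by “genetic” similarity concrete, here is a minimal sketch in Python. It is not Pandora’s method, whose details are not described here. The song titles, the three-number “gene” vectors and the choice of Euclidean distance are all assumptions made purely for illustration.

```python
import math

# Hypothetical "gene" scores for three invented songs.
# Each position stands for one rated criterion; the values are made up.
SONGS = {
    "Song A": [4.0, 1.0, 3.5],
    "Song B": [4.0, 1.5, 3.0],
    "Song C": [0.5, 5.0, 1.0],
}


def distance(a, b):
    """Euclidean distance between two equal-length gene vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def most_similar(seed, songs):
    """Return the titles of all other songs, closest to the seed first."""
    others = [title for title in songs if title != seed]
    return sorted(others, key=lambda title: distance(songs[seed], songs[title]))


if __name__ == "__main__":
    print(most_similar("Song A", SONGS))
```

Given a seed song, the function ranks every other song by how close its ratings are, which is roughly the shape of a playlist suggestion built from very little listener input.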

Shortly before the Music Genome Project commenced, George Tzanetakis made Marsyas, an open-source toolkit for automatically classifying songs and entire libraries of music, among other applications. Pandora and Marsyas had similar aims: to intelligently sort music libraries, giving listeners a way to find new artists and retrieve other qualitative information about music. Working at Princeton as a grad student with professor Perry Cook, who wanted to find a way of automatically sorting radio stations, Tzanetakis developed various library-browsing visualizations within Marsyas, including Genre Meter, which can respond live to sound sources and classify them (video demo).

Pandora has taken off as a large-scale commercial venture, with competitors like Spotify and Slacker following in its wake. Tzanetakis’ Marsyas has remained known mostly to academics and computer scientists. Regardless, Tzanetakis’ work addresses issues of music classification in a more radical and even prophetic way than Pandora: all of Marsyas’ “genes” are completely determined by computer automation. Tzanetakis’ contributions to the field of Music Information Retrieval (MIR, for short) have helped to push computers toward increasingly delicate interpretations of one of man’s most elusive forms of expression. Marsyas is available for free download and even has a free user manual.

Though songs in the Pandora database are weighed and sorted by algorithms, a board of experts determines the value of each “gene”. Recently, a New York Times reporter sat in with a group of Pandora’s experts as they listened to songs and then opined about how high each song scored on criteria like “emotional delivery”, “exoticism” and “riskiness”, as well as making more concrete judgements on tempo, instrumentation and harmony.

By contrast, George Tzanetakis’ approach to music classification is completely automated. It needs no panel of experts or crowdsourced participants to complete an intelligently made, intuitively browsable library of music. It works based entirely on the audio signals themselves. Given merely a library of digital song files, George Tzanetakis’ automated classification techniques algorithmically organize songs according to a variety of criteria and present fun interactive ways to browse and compare music.

If the Internet’s dissemination of music continues to accelerate, the world will soon need automated song classification methods like Tzanetakis’ to sort through all the music there is to hear. In light of this problem, Tzanetakis finds a flaw in Pandora’s approach. “I think Pandora is great but has the limitation that it requires extensive manual annotation therefore it will be hard to scale to the entire universe of recorded music.” Indeed, there are major holes in Pandora’s library, and there can be no shortcut to its sorting method: humans will always take a long time to analyze a piece of music, while digital analysis is limited only by processing speed. We are arriving at a point when minds like Tzanetakis’ are necessary.

Marsyas employs an interesting variety of analysis methods. Some techniques are immediately intuitive. For instance, if a song is over ten minutes long, it almost certainly won’t be pop music; it’s probably classical or jazz. The presence of a strong drumbeat suggests that a song is rap or rock. A more esoteric technique could then distinguish rock from rap by finding whether there is a strong melody in the singer’s voice. Frequency analysis can distinguish male and female voices reliably, and can even parse musical instruments very well. When compiled together and cross-referenced against human classification, Tzanetakis’ classification techniques are remarkably accurate and rarely confuse one genre of music for another. (Demo of Marmixer, one of the tools in Marsyas.)
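The intuitive rules in the paragraph above can be written down as a toy decision procedure. This is only a sketch of the reasoning, not Marsyas code: the function name, the three inputs and the exact 600-second cutoff are assumptions, and it further assumes that a strongly melodic vocal points to rock rather than rap. A real system would first have to measure these properties from the audio signal.

```python
def guess_genre(duration_s, has_strong_beat, has_melodic_vocal):
    """Guess a genre from three already-measured properties of a song.

    duration_s        -- song length in seconds
    has_strong_beat   -- True if a strong drumbeat was detected
    has_melodic_vocal -- True if the singer's voice carries a strong melody
    """
    # Very long pieces are unlikely to be pop music.
    if duration_s > 600:
        return "classical or jazz"
    # A strong drumbeat narrows the guess to rap or rock;
    # the vocal melody then separates the two (an assumption here).
    if has_strong_beat:
        return "rock" if has_melodic_vocal else "rap"
    # The rules above say nothing about the remaining cases.
    return "unknown"


if __name__ == "__main__":
    print(guess_genre(900, False, False))
    print(guess_genre(210, True, False))
```

Each rule alone is crude; the article's point is that many such cues, combined and checked against human labels, become accurate.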

Although some of these analyses may seem advanced, audio analysis is at a much more rarefied point today than when Tzanetakis originated Marsyas. “Query-by-hum” analysis, which is already well established and reliable, can take a melody (say, from a microphone that a user sings into) and identify exactly what song is being sung. One current problem in audio analysis, according to Tzanetakis, is to identify specific vocal performers (say, Tina Turner) automatically based on nothing but the sound file. Tzanetakis’ massive body of published work (much of which can be read online here) has refined a wide variety of analysis and classification techniques.

As Tzanetakis has added analysis and visualization tools to Marsyas, other developers have employed Marsyas and added to it as a community of similar interests. One application that clearly compares Marsyas’ powers to Pandora’s model is Doug Turnbull’s CAL500, a website with 500 streaming mp3s that have been automatically classified using Marsyas. These computer-generated “Predictions” are placed in a column right next to human-generated “Ground Truth” tags. The computer-generated tags make concrete observations about instrumentation and tempo, but also confidently judge the emotional qualities of the music and the settings in which a song is appropriate to play. Although there are some differences between the two columns, there is also a remarkable amount of overlap; the site is a wonderful snapshot of MIR’s adolescence and promising future.

Another recent proof-of-concept came with a smartphone app Tzanetakis helped develop called Geoshuffle, which automatically generates playlists based on signal analysis, minimal user input and even GPS information to determine the user’s environment (for instance, different playlists would be generated during a commute to work and while sitting at work). In trial use, Tzanetakis found that listeners did not often skip past tracks in Geoshuffle’s playlists. Though some elements of this invention are rough, the implications are profound: Geoshuffle can play songs you’ve never heard before and you’ll probably have the patience and interest to listen to almost every one of its suggestions.

A relatively distinct strain of Tzanetakis’ work has dealt with quantifying various kinds of world music. In a paper on Computational Ethnomusicology, Tzanetakis lays the groundwork for legitimizing and unifying this new scientific field and charts some of his recent advances. Just as with his approach to Western popular music, Tzanetakis commits himself to an approach to ethnomusicology that relies on analysis of the audio signal itself.

Reprinted from the Journal of Interdisciplinary Music Studies

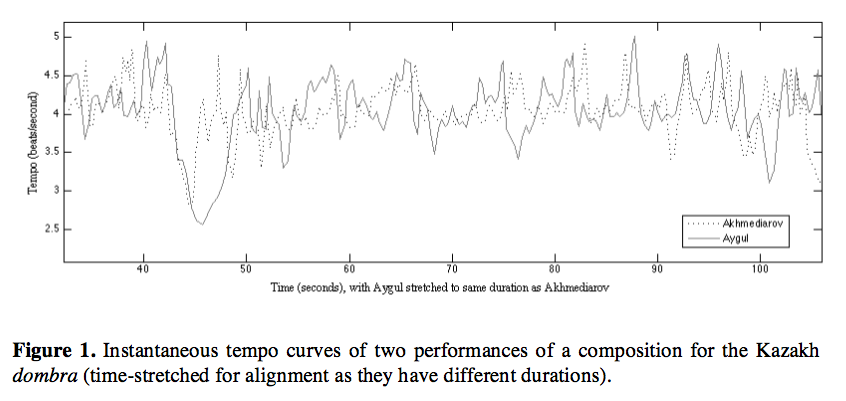

One powerful example of Tzanetakis’ use of digital analysis is his analysis of rhythm. Rhythm in various kinds of world music has long posed a problem for musicologists, either because its use is so complex or because it takes a fluid form. For instance, Dobra playing from Kazakhstan is noted for its rapid shifts in tempo. Using a tempo detector with an extremely fast rate of recognition, Tzanetakis was able to graph the complex rhythmic changes of a performance. Such a graph could be used to teach a computer to reproduce the very fluid, intuitive rhythmic feel, something that would have been impossible without digital analysis. In the past, ethnomusicologists attempted to chart rhythm on analog tape by physically marking rhythm on the tape itself. Tzanetakis’ digital analysis laps these techniques by instantaneously analysing

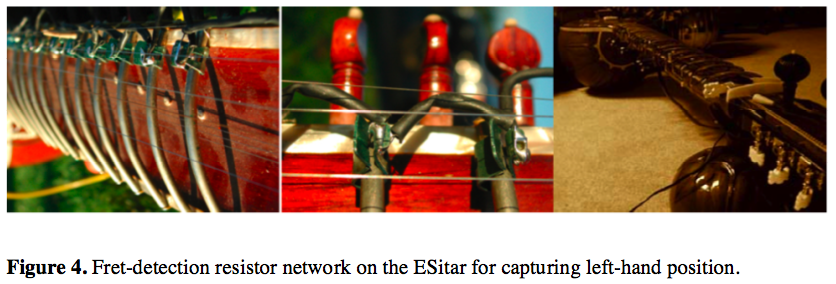
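As a rough illustration of how a tempo graph like the one described above can be derived, the sketch below turns a list of beat timestamps into an instantaneous tempo curve. It is not Tzanetakis’ tempo detector: it assumes the beat times (in seconds) have already been detected by some other means, and the function name and sample data are invented for the example.

```python
def tempo_curve(beat_times):
    """Return (time, bpm) pairs, one per gap between consecutive beats.

    beat_times -- strictly increasing beat timestamps in seconds
    """
    curve = []
    for prev, curr in zip(beat_times, beat_times[1:]):
        interval = curr - prev
        if interval <= 0:
            raise ValueError("beat times must be strictly increasing")
        # One beat every `interval` seconds equals 60 / interval beats per minute.
        curve.append((curr, 60.0 / interval))
    return curve


if __name__ == "__main__":
    # Invented beat times: steady at first, then suddenly twice as fast.
    for time_s, bpm in tempo_curve([0.0, 0.5, 1.0, 1.25, 1.5]):
        print(f"{time_s:5.2f} s  {bpm:6.1f} BPM")
```

Plotting the resulting pairs gives a curve that rises and falls with the performer’s tempo, beat by beat, rather than a single average figure for the whole piece.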

Another interesting application of Tzanetakis’ digital approach to ethnomusicology quantifies the gestures and hand movements of a sitar player. Tzanetakis was involved in making the “ESitar”, which converts the hand motions of sitar players into binary code, so that subtle differences in sound are directly mapped to the hand movements of the player. In addition, the sensors placed on the sitar can be used to trigger computer music for accompaniment purposes.

The ESitar maps hand movements to the audio signal produced

In addition to ethnomusicology and MIR, Tzanetakis’ audio tools have even proven useful for research on orca calls, teaching robots to dance and searching through news archives. But while advanced digital analysis has been the engine for Tzanetakis’ powerful analytical tools, he stops short of suggesting that all music or all analysis should become digital. “The digital analysis is just a tool no different than a score or a piano.”

As it turns out, analysis of recorded music’s every feature opens up a realm of reconfiguring and rethinking static pieces of recorded sound, just as a musical score might have been revised for a new performance. “I view automatic music analysis as a way to creatively deconstruct and reconstruct music. It’s a process that has always taken place since the invention of recording. Recording made music a frozen monolithic artifact and only slowly and with a lot of manual effort are we able to erode this idea. I think when anyone will be able to take apart any music piece and recombine it in new ways, that will be the future.”

Nat Roe is a DJ with WFMU and the editor of WFMU's blog. He has contributed in the past to The Wire, Signal To Noise and the Free Music Archive. Nat also cooperatively manages Silent Barn, a DIY venue in Ridgewood, Queens. As a sound collagist, Nat makes use of cut-up music libraries, notably with his recent compilation piece "Adult Contemporary Redux", which is available for download on Ubuweb.