MIT researchers have developed a hands-free, eyes-free system that lets people find information about objects without having to actively scan them or use a keypad or speech interface.

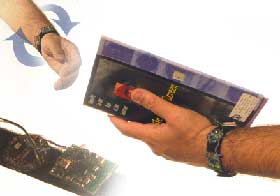

The ReachMedia system has three parts: a bracelet that reads RFID tags to detect the object the user is holding; an accelerometer that detects hand gestures; and a cell phone that connects to the Internet, plays a sound when an object or gesture is recognized, and provides audio information about the object in hand.

For example, a person could pick up a book to look up reviews of it online. A sound from her phone would tell her that information about the book is available. She would then use gestures (a downward flick and right and left rotations) to select the current menu item or move to the previous or next one.
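The gesture-driven menu is essentially a small state machine. Below is a minimal sketch of that idea, not ReachMedia's actual code. The gesture labels, the menu items and the wrap-around behaviour are all hypothetical. The post also doesn't say exactly which rotation goes which way: reading its word order literally, the sketch assumes right rotation means "previous" and left rotation means "next".

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical gesture labels; the real recognizer's output format is not described in the post.
FLICK_DOWN = "flick_down"
ROTATE_RIGHT = "rotate_right"
ROTATE_LEFT = "rotate_left"


@dataclass
class AudioMenu:
    """A sketch of an audio menu for the object in hand, driven only by gestures."""
    items: List[str]
    index: int = 0

    def __post_init__(self) -> None:
        if not self.items:
            raise ValueError("menu needs at least one item")

    def current(self) -> str:
        # The item the phone would read aloud at this point.
        return self.items[self.index]

    def handle(self, gesture: str) -> Optional[str]:
        """Apply one gesture; return the chosen item on a flick, otherwise None."""
        if gesture == FLICK_DOWN:
            return self.current()
        if gesture == ROTATE_RIGHT:    # assumed mapping: previous item (wraps around)
            self.index = (self.index - 1) % len(self.items)
        elif gesture == ROTATE_LEFT:   # assumed mapping: next item (wraps around)
            self.index = (self.index + 1) % len(self.items)
        else:
            raise ValueError(f"unknown gesture: {gesture}")
        return None


# Usage with made-up menu entries for a book:
menu = AudioMenu(["Reviews", "Price", "Author info"])
menu.handle(ROTATE_LEFT)          # moves to "Price"
print(menu.handle(FLICK_DOWN))    # selects and prints "Price"
```

Keeping navigation down to three gestures means the user never has to look at a screen. Each move only changes which item the phone announces, and only the flick commits to a choice.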

PDF of the project by Assaf Feldman, Sajid Sadi and Emmanuel Munguia Tapia.

Via TRN.

Originally posted on we make money not art by Rhizome